BACKUP AND RESTORE PROCEDURES IN HADOOP

Backup:

There is a common misconception that Hadoop protects data loss.

Hadoop only replicates the data by three times (Default), and it is not completely safe.

Backups protect against worst-case disaster situations and logical data corruption.

Reasons to backup your data:

- Backup is required to prevent the accidental loss of data.

- Reconstructing the loss of data could become expensive or in some cases you cannot reconstruct at all.

- To avoid the application downtime backup is important.

- To prevent the accidental loss of data due to the various reasons like hardware failure, disk failure, corrupted data, application/user errors, loss of data center, power and network outage.

What to backup in Hadoop:

- There are different data sets available in Hadoop to backup and the company should be aware of what to backup.

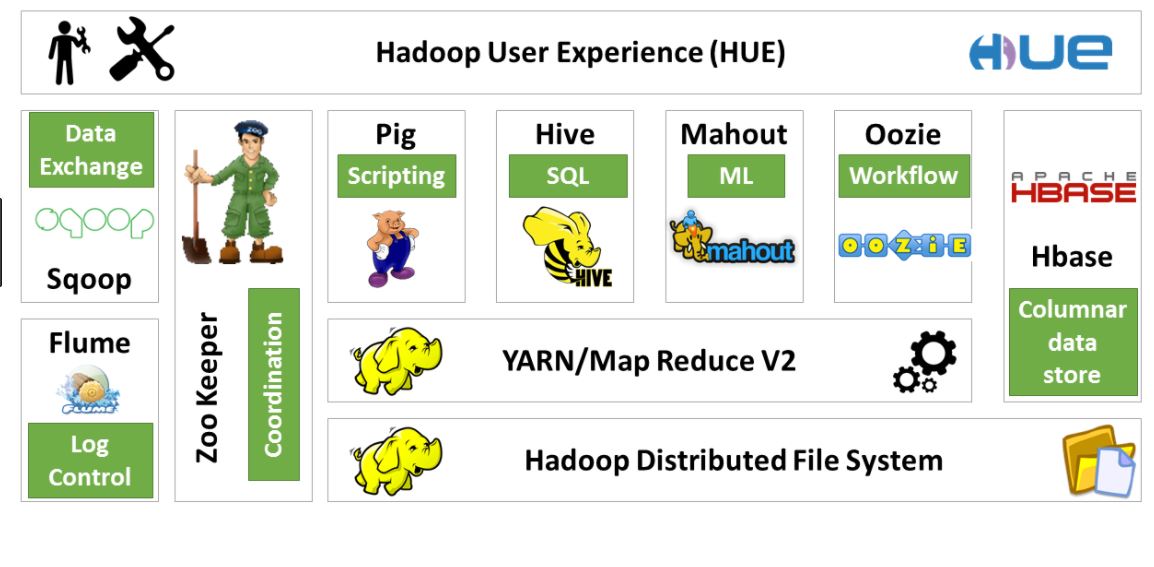

- Metadata of the data is more important than any other data; this may include metadata of namenode, hive, hbase etc.

- Complete cluster data can also be backed up based upon the economic norms of the company’s policy.

- Databases data like mysql, postgres or oracle dB is also important to backup.

Restoring or Recovery:

Data is recovery is an important task for an organization which suffered from data loss to avoid the application downtime.

In order to do a data recovery, one should have the same environment setup as the previous cluster.

And also an organization should look into all the required security groups and firewall permissions to access the environment and as well as the sufficient bandwidth.

There are different time based operations that are performed in order to perform a recovery and it can be calculated through procedure like RTO/RPO.

Recovery time Objective (RTO):

Defining the RTO is nothing but setting up the target time for recovery and restoration of any data loss in your business after a disaster has struck.

It also refers to the point in time in the future at which you will be up and running again.

Recovery point Objective (RPO):

RPO refers to the point in time in the past to which you will recover.

RPO is determined by looking at the time between data backups and the amount of data that could be lost in between backups.

How to know your RTO/RPO?

- The length of time between data backups.

- The amount of data that could potentially be lost within that time.

Who takes the decision of RTO/RPO?

Company’s stakeholders and business line owner’s calculates in which band you are in and then calculates the time based on the bands.

Usually the bands will range from one min to one month based on the organization.

Backup Procedures:

NFS:

Create a NFS and backup all the metadata to that remote server periodically.

This can also be managed as a batch job to perform daily when there is less number of users accessing the cluster.

Snapshots:

In recent updates every Hadoop flavors has an option to take the snapshots of the cluster’s current state, and then storing them in a remote location.

Taking snapshots can also be automated as a daily job and then move them to a secure different location.

BDR Site:

Backup and disaster recovery site is nothing but having a different data center with same environmental configuration in a different location.

While ingesting the data into the cluster, simultaneously replicate the same data to the BDR site, so that you have two cluster’s in handy.

In this case the RTO can be less than a min.

Cloud solutions:

Moving the data to the cloud is relatively a new concept.

Organizations are now using the cloud in slow form despite the cost and scalability benefits.

All the data can be backed up to the block storage like S3, Big query and other storage services.

Vinod

Nice Article.

anil

good information

ravi

nice data

Raju babu

Good Information

Aditya

Good Documentation

Manikanta

Super Artical and it is so usefull

sai

good data

Mohanarao

Very interesting and useful

Anandarao

The article is very useful